In this brief overview, we’ll try to answer the question: What is WebRTC?

This guide is written for laymen who want to know how WebRTC handles audio, video, and data in their browsers. Along the way, it also provides insight into how WebRTC powers the VirtualPBX Web Phone.

WebRTC, an active project since 2011, is an acronym that stands for Web Real-Time Communication. It helps web developers easily create applications that allow users to reach each other through audio, video, and data (like text messages) sent through web browsers. Since its inception, it has gained adoption in many popular web browsers and has created a revolution in browser-based communication.

Prior to its release, web browsers could natively handle only the display of web pages written primarily in HTML and JavaScript. The sharing of real-time audiovisual materials between two or more users was not part of their code; that task was left to browser plugins and operating system-specific software.

Programs like Skype allowed individuals to engage in live voice and video chats. Using Skype required downloading an application that was built for the user’s device – a Windows desktop or Android phone, for instance. Although this may seem similar to today’s popular Google Meet, users of Meet can communicate by simply visiting a website in a browser that supports WebRTC. No separate program needs to be downloaded.

Both Skype and Meet use the internet to handle real-time communication. The primary distinction for end users is that Skype runs as a separate application and Meet runs within a website.

WebRTC’s revolution reflects the split between native applications and web applications. WebRTC still depends on browsers that support it, and those browsers must themselves be built to run on devices like desktops and smartphones. Even so, public enthusiasm for web-based applications has made audio and video communication through the browser an expected stock feature. In short, the public is more reliant on web applications than ever before.

The following parts of this document will describe how the WebRTC protocol achieves the feat of natively handling audio, video, and data in a way that’s accessible to users and interoperable for developers.

It’s important to note that WebRTC as a brand is different from the concept of web-based real time communications.

WebRTC is maintained by Google engineers. The code that the WebRTC protocol uses is supported within Chrome, Firefox, Opera, and other browsers.

Although the idea of web-based real time communications has become synonymous with the WebRTC brand, there have been other implementations that found support within major browsers. One popular example, although it’s no longer maintained, is OpenWebRTC, which promoted the OpenH264 codec for video and used the GStreamer multimedia framework to read and process data streams.

WebRTC (from Google) supports multiple audio codecs, including Opus as its default, and H.264 for video (which is different from the OpenH264 development referenced above).

What you should know about this document is that any mention of “WebRTC” refers to Google’s brand of the general web-based real time communications concept. It is the most widespread and popular of the implementations. However, the underlying procedures discussed here can be understood to function in a similar manner within other implementations.

The example below uses an HTML video tag to accept a video stream from a user’s webcam.

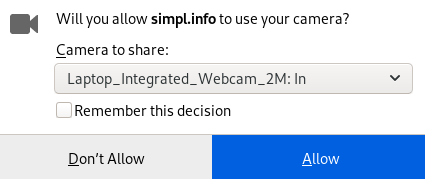

A lot of underlying code makes this possible. What’s here is just a bit of JavaScript (mostly the getUserMedia API) that tells the web browser to ask the user for permission to use their video feed and then, with permission granted, sends that person’s feed into a container on a web page. A similar block of code would request a user’s microphone.

Any time Google Meet prompts Chrome, Firefox, or Opera to allow use of your camera, this is what’s happening. The developer has to put forth relatively little effort because the getUserMedia API knows how to speak to the browser and access the user’s devices.
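A minimal sketch of the kind of code described above, assuming a page that already contains a video element. The function name `startWebcamPreview` and the injectable `mediaDevices` parameter are illustrative choices rather than part of WebRTC; in a browser the second argument simply defaults to `navigator.mediaDevices`.

```javascript
// Ask for camera access and show the resulting stream in a <video> element.
// `mediaDevices` is injectable only so the sketch can be exercised outside a browser.
async function startWebcamPreview(videoElement, mediaDevices = navigator.mediaDevices) {
  // The browser shows its permission prompt here; the promise rejects if the user declines.
  const stream = await mediaDevices.getUserMedia({ video: true, audio: false });
  // srcObject attaches a live MediaStream to the element.
  videoElement.srcObject = stream;
  return stream;
}
```

On a real page this might be called as `startWebcamPreview(document.querySelector("video"))`, with the `autoplay` attribute set on the video element so the feed starts playing once it is attached.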

    // Create a peer connection; `config` would hold settings such as ICE servers.
    var connection = new RTCPeerConnection(config);

    // Capture the local camera and microphone, then attach each track to the connection.
    async function openCall(pc) {
      const gumStream = await navigator.mediaDevices.getUserMedia({video: true, audio: true});
      for (const track of gumStream.getTracks()) {
        pc.addTrack(track);
      }
    }

    // A data channel is created from a peer connection.
    var peerconnection = new RTCPeerConnection();

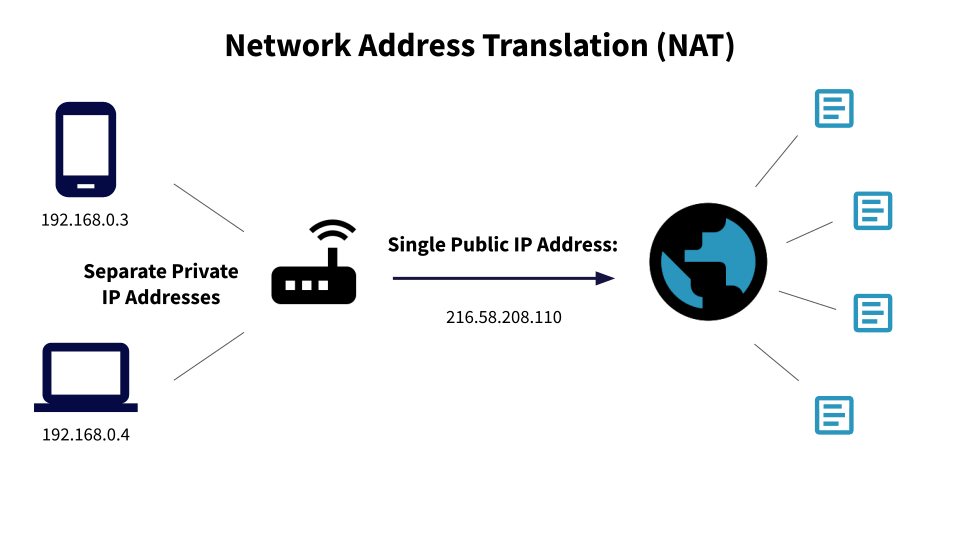

    var dataconnection = peerconnection.createDataChannel("sendChannel");

According to an article published at callstats.io, NAT (Network Address Translation) was created in the 1990s to help extend the life of IP addresses – the unique identifiers for devices on the public internet.

The IPv4 standard – which uses a grouping of numbers similar to 216.58.208.110 – has a limit of about 4.3 billion addresses. Many blocks of possible addresses are reserved for private networks and special functions within the internet. NAT helps extend the life of IPv4 because it increases the number of devices that can share that 4.3 billion allotment.
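The size of the IPv4 address space follows from simple arithmetic: each address is 32 bits, written as four numbers from 0 to 255. The short sketch below works that out; the helper name `ipv4ToNumber` is made up for illustration.

```javascript
// An IPv4 address is 32 bits, written as four 8-bit numbers (octets).
const totalIPv4Addresses = 2 ** 32;

// Convert dotted text such as "216.58.208.110" into the single number it represents.
function ipv4ToNumber(address) {
  return address
    .split(".")
    .reduce((total, octet) => total * 256 + Number(octet), 0);
}

console.log(totalIPv4Addresses);              // 4294967296
console.log(ipv4ToNumber("255.255.255.255")); // 4294967295, the highest address
```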

NAT works by allowing multiple devices on a private network to use a single public IP address. To be brief: Device 1 (IP: 192.168.0.3) and Device 2 (IP: 192.168.0.4) on a private network could both use the public address 216.58.208.110 to reach the public internet because the NAT router can differentiate between the traffic that moves to and from each device.
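The bookkeeping a NAT router does can be pictured as a lookup table. The following is a toy model only, assuming a simple port-based NAT; the class name `NatRouter`, its methods, and the starting port 40000 are invented for this illustration, and real routers are considerably more involved.

```javascript
// Toy model of a NAT router's translation table (port-based NAT).
class NatRouter {
  constructor(publicAddress, firstPublicPort = 40000) {
    this.publicAddress = publicAddress;
    this.nextPort = firstPublicPort;
    this.outbound = new Map(); // "privateIp:privatePort" -> public port
    this.inbound = new Map();  // public port -> { ip, port } of the private device
  }

  // A private device sends traffic out: return the public address and port
  // that the outside world will see for that device.
  translateOutbound(privateIp, privatePort) {
    const key = `${privateIp}:${privatePort}`;
    if (!this.outbound.has(key)) {
      const publicPort = this.nextPort++;
      this.outbound.set(key, publicPort);
      this.inbound.set(publicPort, { ip: privateIp, port: privatePort });
    }
    return { ip: this.publicAddress, port: this.outbound.get(key) };
  }

  // A reply arrives on a public port: find which private device it belongs to.
  translateInbound(publicPort) {
    return this.inbound.get(publicPort) ?? null;
  }
}

const router = new NatRouter("216.58.208.110");
const device1 = router.translateOutbound("192.168.0.3", 5000);
const device2 = router.translateOutbound("192.168.0.4", 5000);
console.log(device1, device2); // same public IP, different public ports
```

Both devices appear to the internet under one public address, and the port number is what lets the router send each reply back to the right device.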

WebRTC uses ICE (Interactive Connectivity Establishment) to find the best way to connect devices with one another. It can recognize how a NAT is being used and determine whether or not a STUN or TURN server (discussed in the next subsections) is necessary to route information.

While NAT may be associated with an actual physical device (a router), ICE is more like a set of standardized instructions. WebRTC knows how to follow the Internet Engineering Task Force standard RFC 8445 to determine when and how to use external servers.
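In practice, a developer tells WebRTC which external servers ICE may use through the configuration object passed to `RTCPeerConnection`. The sketch below builds such an object; the helper name `buildIceConfig`, the server URLs, and the credentials are all placeholders, not real services.

```javascript
// Build the configuration object that RTCPeerConnection accepts.
// Every URL and credential here is a placeholder supplied by the caller.
function buildIceConfig({ stunUrl, turnUrl, turnUsername, turnCredential }) {
  const iceServers = [{ urls: stunUrl }];
  if (turnUrl) {
    // TURN servers normally require credentials; STUN servers usually do not.
    iceServers.push({ urls: turnUrl, username: turnUsername, credential: turnCredential });
  }
  return { iceServers };
}

const iceConfig = buildIceConfig({
  stunUrl: "stun:stun.example.org:3478", // hypothetical server
  turnUrl: "turn:turn.example.org:3478", // hypothetical server
  turnUsername: "demo-user",
  turnCredential: "demo-secret",
});

// In a browser: const pc = new RTCPeerConnection(iceConfig);
console.log(iceConfig.iceServers.length); // 2
```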

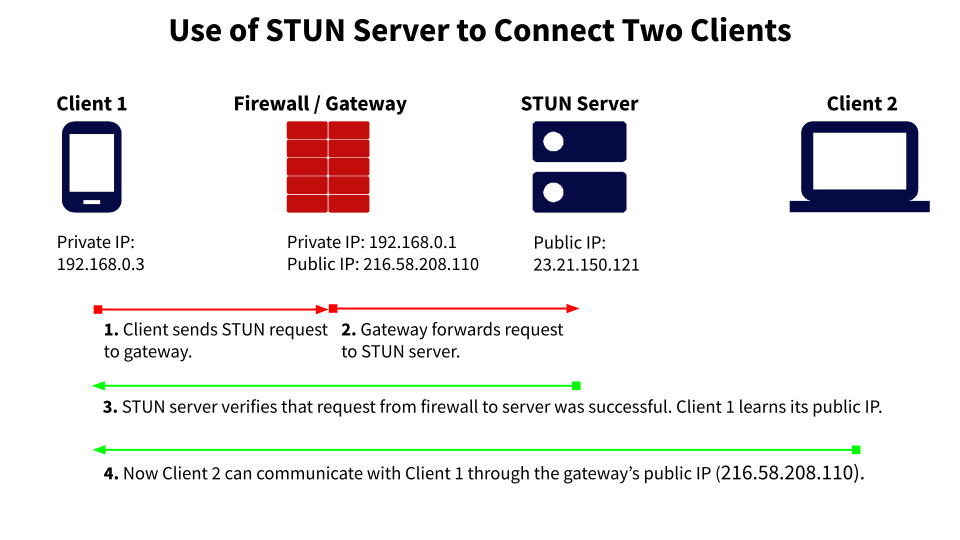

Understanding NAT is the key to understanding what a STUN server does.

WebRTC may need to interact with a STUN server (usually one that sits in the public internet) after one user device asks to connect to a second device. Between two clients, the STUN server helps the first client learn its public IP address so it can tell the second client how to reach it.

The STUN server also provides information about which type of NAT the client sits behind so communication between clients can begin.
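The addresses that ICE gathers are exchanged between clients as short text lines called candidates. A public address learned through STUN is labeled `srflx` (server reflexive). The sketch below reads the main fields of one such line; the function name `parseIceCandidate` is illustrative and the sample line uses documentation-only addresses.

```javascript
// Parse the core fields of an ICE candidate line.
// Layout: candidate:<foundation> <component> <transport> <priority> <address> <port> typ <type> ...
function parseIceCandidate(line) {
  const fields = line.trim().replace(/^a=/, "").split(/\s+/);
  if (!fields[0].startsWith("candidate:") || fields[6] !== "typ" || fields.length < 8) {
    throw new Error("Not an ICE candidate line: " + line);
  }
  return {
    transport: fields[2].toLowerCase(),
    priority: Number(fields[3]),
    address: fields[4],
    port: Number(fields[5]),
    type: fields[7], // "host", "srflx" (learned via STUN), "prflx", or "relay" (via TURN)
  };
}

// Made-up sample: public address 203.0.113.7 discovered for private device 192.168.0.3.
const sampleCandidate =
  "candidate:842163049 1 udp 1694498815 203.0.113.7 46154 typ srflx raddr 192.168.0.3 rport 46154";
console.log(parseIceCandidate(sampleCandidate).type); // srflx
```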

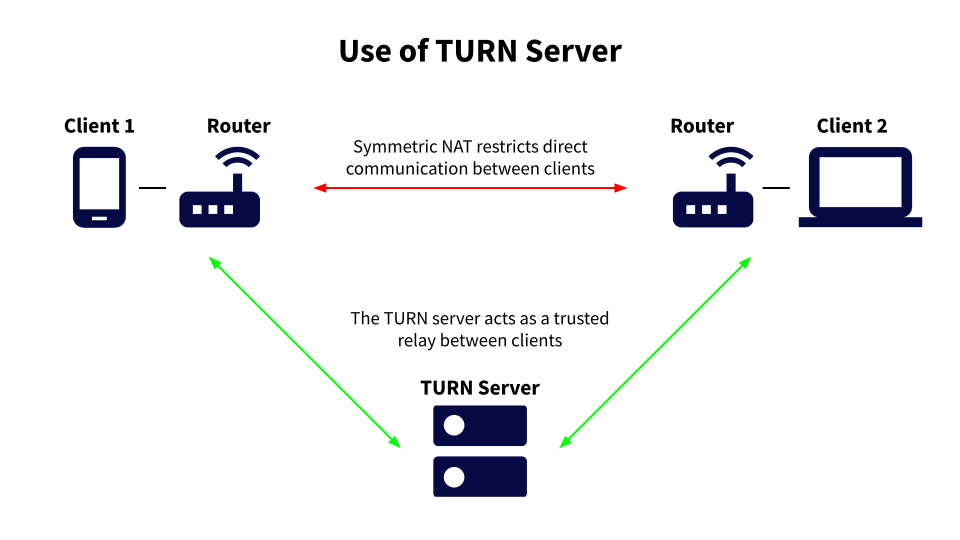

Sometimes, the router’s NAT says a device is not allowed to be accessed from a server it has never met before. This is called a Symmetric NAT restriction, and it basically says that the device is only allowed to trade information with its friends.

In this case, the ICE protocol can make use of a TURN server that sits between devices. The TURN server acts as a trusted partner in the WebRTC chain and relays all information that’s traded between the devices.

Using a TURN server can be much slower and create delay in audio, video, and data sharing. It is most often used only as a last resort when other types of connections aren’t possible.
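The "last resort" ordering is visible in how ICE scores its options. RFC 8445 (section 5.1.2) gives a recommended priority formula and type preferences, in which relayed (TURN) candidates score lowest. The sketch below applies that formula; the default local preference of 65535 is an assumption made for the example, and browsers are free to choose other values.

```javascript
// Type preferences recommended by RFC 8445; a higher value is tried first.
const TYPE_PREFERENCE = { host: 126, prflx: 110, srflx: 100, relay: 0 };

// priority = 2^24 * typePreference + 2^8 * localPreference + (256 - componentId)
function candidatePriority(type, localPreference = 65535, componentId = 1) {
  return 2 ** 24 * TYPE_PREFERENCE[type] + 2 ** 8 * localPreference + (256 - componentId);
}

console.log(candidatePriority("host"));  // 2130706431 (direct address)
console.log(candidatePriority("srflx")); // 1694498815 (public address found via STUN)
console.log(candidatePriority("relay")); // 16777215   (relayed through TURN)
```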

A common topic of discussion with regard to internet-based communication is security. Individuals want to know if their data is being protected while on a video call or if their files can be intercepted while in transport through a WebRTC application.

There are a lot of facets to this discussion. While this document will not dive into them all, it will address two types of security protocols used in WebRTC: DTLS that helps protect data and SRTP that helps protect voice and video.

The following two subsections will provide basic information about these protocols, largely referenced from the NTT Communications Study of WebRTC Security. The study provides an in-depth look at those security measures in addition to an overview of WebRTC as a whole.

DTLS is built on the TLS (Transport Layer Security) protocol that has been established throughout the internet. TLS is the same protection that HTTPS websites use – sites like Amazon or your local bank that process credit card transactions or other sensitive information.

The use of TLS prevents eavesdropping and alteration of information. It gives users an expectation of data privacy through cryptography and transmission reliability through integrity checks. It also makes sure the sender and receiver are valid by requiring authentication between parties.

DTLS (Datagram Transport Layer Security) runs over UDP (User Datagram Protocol), which transmits data in a manner that emphasizes a reduction in latency. This means users can expect DTLS to handle their data quickly. UDP’s emphasis on latency means it allows packets of data to be dropped or reordered in transport; DTLS makes up for those shortcomings by numbering its records and retransmitting lost handshake messages.
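One general way a datagram-based protocol can notice dropped or reordered packets is to number each one. The sketch below illustrates only that idea; it is not DTLS’s actual record layer, and the function name `inspectArrivals` is invented for the example.

```javascript
// Illustration only: with numbered datagrams, a receiver can spot
// gaps (loss) and late arrivals (reordering).
function inspectArrivals(sequenceNumbers) {
  const seen = new Set();
  const reordered = [];
  let highest = -1;
  for (const seq of sequenceNumbers) {
    // A number lower than one already received means it arrived late.
    if (seq < highest && !seen.has(seq)) reordered.push(seq);
    seen.add(seq);
    highest = Math.max(highest, seq);
  }
  const missing = [];
  for (let seq = 0; seq <= highest; seq++) {
    if (!seen.has(seq)) missing.push(seq);
  }
  return { reordered, missing };
}

console.log(inspectArrivals([0, 1, 3, 2, 5])); // reordered: [2], missing: [4]
```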

You can read more about latency and the use of packets on the VirtualPBX page, What is VoIP?

SRTP is used to protect audio and video streams. Like DTLS, it encrypts data and provides integrity checks.

The primary reason SRTP is chosen for these types of transmissions is that it’s lighter than DTLS. It requires less overhead, so voice and video, which are sensitive to delays, can remain protected and still arrive without noticeable lag for conversation participants.

Browser extensions have been developed by the WebRTC community to make use of real-time audio and video safer.

One particular problem with using WebRTC through an encrypted virtual private network (VPN) is that information about users can be leaked outside the encrypted tunnel. This issue of “WebRTC leak” is discussed at length on the CompariTech blog and ip8 WebRTC Test page.

These webpages describe how WebRTC leak affects users and how it can be mitigated with browser-specific fixes and plugins like the WebRTC Leak Shield plugin. Plugins like these may not act as a panacea, and additional measures like switching VPN providers may be necessary. Still, the availability of community products like this shows how active and supportive the WebRTC ecosystem is.

1998-2024. VirtualPBX.com, Inc. All rights reserved. VirtualPBX, TrueACD, and ProSIP are ® trademarks of VirtualPBX.com, Inc.